What self-driving teaches us about implementing AI in healthcare workflows

A conversation with Will Xie, co-founder of Cosign AI

Will Xie is the co-founder and CEO of Cosign AI, a medical documentation company aiming to reduce the administrative burden for clinicians and prevent claim denials. Prior to founding Cosign, he spent six years working on self-driving at Cruise and Waymo. The following post is adapted from a conversation between Louie Cai and Will Xie.

You’ve spent most of your career working on self-driving. Did you always want to work in that space?

I have always loved working with robots, so I earned my BS in computer engineering and my master’s in computer science. I began doing research as an undergraduate, focusing on a niche field called swarm robotics. The goal was to determine if many simple robots could perform complex tasks.

This approach challenges the conventional definition of intelligence by showing how many less capable agents can collectively accomplish complex tasks, much as ants solve problems in nature.

For example, imagine “seeing” without something as complex as the human eye. These robots use low-end sensors, such as ultrasonic sensors, to estimate distances and locate objects through triangulation. Like Roombas, they can also be equipped with bumpers to detect physical contact. One application of this research is in rescue missions: after a disaster, deploying a large number of inexpensive robots to find survivors can be more effective than using a few costly ones.
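
The distance-based localization described above can be sketched in a few lines. This is a minimal illustration, not the original research code: it assumes three robots at known 2D positions, each reporting a distance to the same object, and solves for the object’s position. Because it uses distances only, it is strictly trilateration, the range-based cousin of triangulation. The function name `trilaterate` is hypothetical.

```python
import math


def trilaterate(anchors, ranges):
    """Estimate a 2D position from three known sensor positions and measured distances.

    Hypothetical helper: assumes noise-free ranges and exactly three sensors.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    # Each sensor constrains the object to a circle (x - xi)^2 + (y - yi)^2 = ri^2.
    # Subtracting the first circle's equation from the other two cancels the
    # squared terms and leaves two linear equations in x and y.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        # Sensors on a single line cannot distinguish mirror-image positions.
        raise ValueError("sensors are collinear; position is ambiguous")
    # Solve the 2x2 linear system with Cramer's rule.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y


anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
ranges = [math.dist(a, (1.0, 2.0)) for a in anchors]
print(trilaterate(anchors, ranges))  # approximately (1.0, 2.0)
```

Cheap ultrasonic sensors are noisy, so a swarm would more plausibly combine readings from many robots with a least-squares fit; the three-sensor version just keeps the geometry visible.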

How do you explain self-driving to a layperson? It seems stressful: managing speed, watching for erratic drivers, and avoiding accidents.

Essentially, self-driving is a robotics problem. People often think of robots as human-like, but a robot is anything that can perceive and interact with the world. In some ways, self-driving is simpler than many robotics tasks because the set of possible actions is limited: you steer left or right and control your speed. You rarely move backward, and you can’t leave the ground.

What’s tough is the chaotic and unpredictable driving environment. Machines struggle with edge cases, which humans handle well. So, the key challenge is decision-making, especially since errors can be fatal. There’s no plan that can account for every possible scenario. We have to make the best calls given all the uncertainties. The goal is not to be perfect but to perform better than humans, which can be difficult due to the many nuances that humans find second nature. Additionally, the driving environment is designed for humans driving with other humans.

Can you give an example of something that seems trivial but is actually difficult to solve in self-driving?

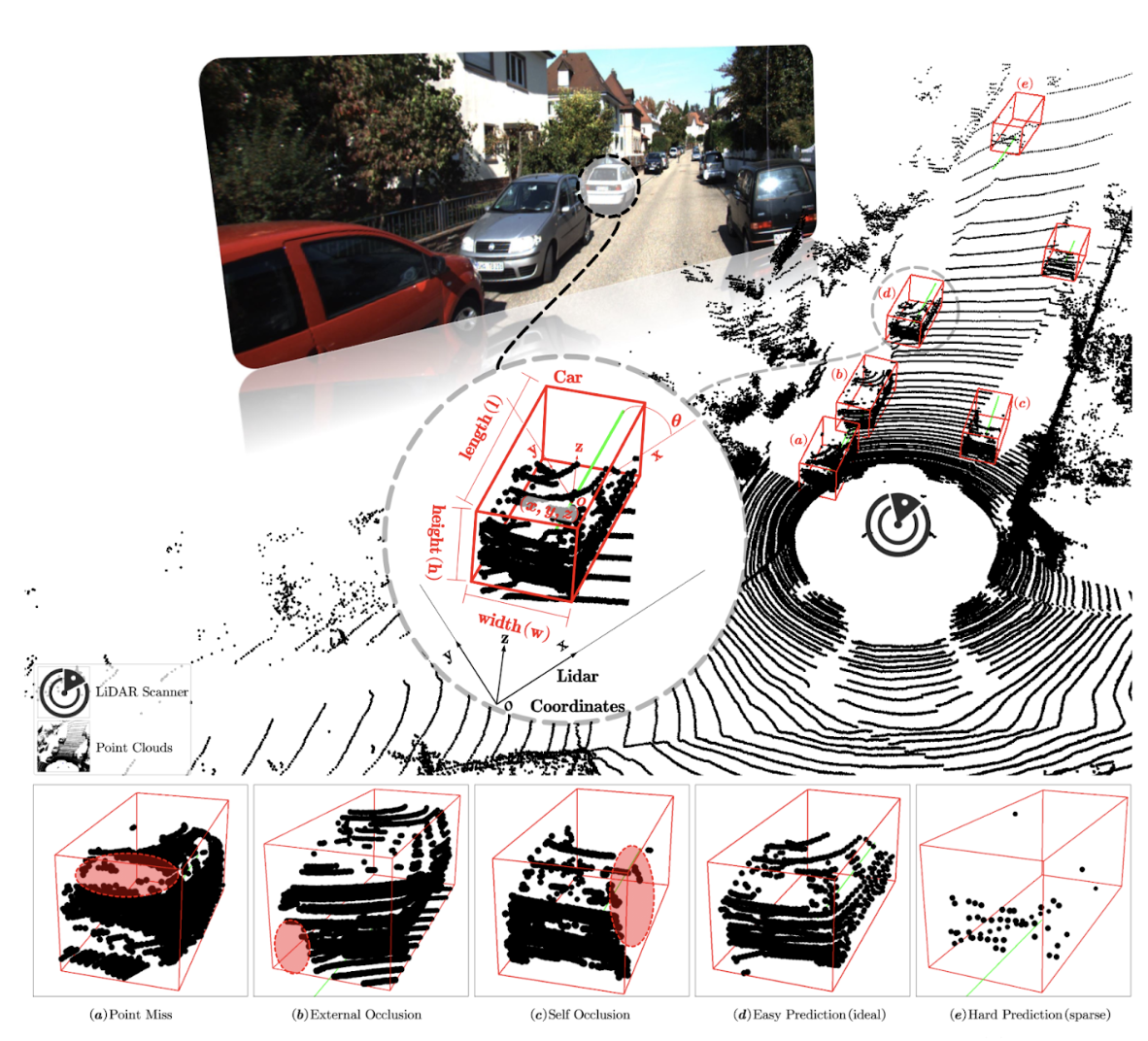

A simple street to the human eye becomes a complex probability problem for AI. Source: https://arxiv.org/pdf/2106.10823

One problem that seems simple but is actually complex is occlusion, when objects are partially or fully blocked from view. Full occlusion happens when an object is completely hidden, like something around a corner.

When we drive, we subconsciously make assumptions, based on previous experience, about areas on or off the road that we cannot fully see. Computers must also make conservative assumptions about what they can’t see, even though they have fewer blind spots. Occlusion influences everything, including decision-making, speed adjustment, and lane choice. For example, if a car disappears from view because an oncoming truck blocks our sight, the computer must predict whether and when it might reappear. This is a complex task that involves managing risk and uncertainty.
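
To make the “whether and when” question concrete, here is a deliberately simplified sketch. It assumes the hidden car moves along a single lane (one coordinate, in meters), that we know where it was last seen and where the occluded stretch ends, and that its speed lies somewhere between assumed bounds. The function name and the speed-bound model are hypothetical; production planners use far richer motion and uncertainty models.

```python
import math


def reappearance_window(last_seen_s, occlusion_end_s, v_min, v_max):
    """Return (earliest, latest) times in seconds at which an occluded car could re-emerge.

    Hypothetical 1D model: the car travels along its lane at an unknown speed in
    [v_min, v_max] (m/s). A latest time of math.inf means it may never reappear,
    for example because it stopped while hidden.
    """
    distance = occlusion_end_s - last_seen_s
    if distance < 0:
        raise ValueError("occlusion must end ahead of the last observed position")
    if v_max <= 0:
        # The car cannot be moving forward, so it never emerges ahead.
        return math.inf, math.inf
    earliest = distance / v_max  # fastest plausible car shows up first
    latest = math.inf if v_min <= 0 else distance / v_min
    return earliest, latest


print(reappearance_window(0.0, 30.0, 5.0, 15.0))  # (2.0, 6.0)
```

The conservative reading is that the planner should stay prepared for the car to appear anywhere in the window, including never, rather than betting on a single predicted time.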

For humans, understanding the state of a partially occluded object, such as its size, speed, and trajectory, is manageable because we use contextual information and intuition. AI approaches these tasks differently. It often has to work with lower-fidelity data, such as camera images that capture less detail than human vision. Sometimes the signals conflict: an object might look like a car to the camera but like a bike to the lidar.
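
One textbook way to reconcile conflicting sensors is Bayesian fusion. The sketch below is an illustration, not how Cruise, Waymo, or Cosign actually do it: it assumes each sensor outputs calibrated class probabilities, that the sensors are conditionally independent given the true class, and that the prior over classes is uniform. Under those assumptions the fused posterior is proportional to the product of the per-sensor probabilities. The function name is hypothetical.

```python
def fuse_class_probabilities(*sensor_outputs):
    """Fuse per-sensor class probabilities (dicts of class -> probability).

    Assumes conditionally independent sensors and a uniform class prior, so the
    fused posterior is proportional to the product of per-sensor probabilities.
    """
    if not sensor_outputs:
        raise ValueError("need at least one sensor output")
    # Only classes that every sensor scored can be compared fairly.
    classes = set(sensor_outputs[0])
    for out in sensor_outputs[1:]:
        classes &= set(out)
    fused = {}
    for c in classes:
        p = 1.0
        for out in sensor_outputs:
            p *= out[c]
        fused[c] = p
    total = sum(fused.values())
    if total == 0:
        raise ValueError("sensors fully disagree; no class has support from all of them")
    # Renormalize so the fused probabilities sum to 1.
    return {c: p / total for c, p in fused.items()}


camera = {"car": 0.7, "bike": 0.3}
lidar = {"car": 0.4, "bike": 0.6}
print(fuse_class_probabilities(camera, lidar))  # car ~0.61, bike ~0.39
```

The independence assumption is the weak point: camera and lidar errors are often correlated (for example, both struggle in the same occluded region), which is part of why real fusion is harder than this product rule suggests.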

Over time, I started to really appreciate the level of intelligence we humans have to seamlessly stitch together all the sensory information to construct our sense of reality.

What sparked your interest in transitioning to healthcare?

My mission in life is to develop technology that improves our lives, and healthcare has always been an interest of mine. In college, my senior design project involved designing a computer vision-based solution to help the visually impaired navigate better. At that time, the only available product was a $1,000 cane with a single ultrasonic sensor that provided vibration feedback when something was close. This project was my first exposure to building solutions for healthcare and dealing with the inefficiencies in the system, even for something as simple as a modified cane. We managed to develop a prototype that used stereo vision to create a depth image. The proximity of objects in each region was communicated to the user through a grid of haptic feedback vibrators on their arm. This gave the user a much higher-resolution understanding of the environment, helping them avoid collisions.
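
The pipeline described above, from stereo depth to a grid of vibration intensities, can be sketched as follows. This is not the original project’s code. It assumes a disparity map has already been computed (stereo matching is not shown), uses the standard pinhole-stereo relation depth = focal length × baseline / disparity, and maps the nearest obstacle in each grid cell linearly to an intensity between 0 and 1. The function names, the focal length and baseline values, the grid size, and the linear mapping are all hypothetical choices for illustration.

```python
import math


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo relation Z = f * B / d; zero disparity means too far to measure."""
    return focal_px * baseline_m / disparity_px if disparity_px > 0 else math.inf


def haptic_grid(depth, rows, cols, max_range_m):
    """Split a depth image (list of rows, meters) into rows x cols regions.

    Each region's intensity is 1.0 for an obstacle at 0 m, falling linearly to
    0.0 at max_range_m or beyond. Assumes the image is at least rows x cols pixels.
    """
    h, w = len(depth), len(depth[0])
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Collect every pixel that falls inside this grid cell.
            cell = [depth[y][x]
                    for y in range(r * h // rows, (r + 1) * h // rows)
                    for x in range(c * w // cols, (c + 1) * w // cols)]
            nearest = min(cell)  # the closest obstacle drives the vibration
            row.append(max(0.0, 1.0 - nearest / max_range_m))
        grid.append(row)
    return grid


print(depth_from_disparity(40, focal_px=800, baseline_m=0.1))  # about 2.0 m
depth = [[1.0, 4.0, 4.0, 4.0], [4.0] * 4, [4.0] * 4, [4.0] * 4]
print(haptic_grid(depth, rows=2, cols=2, max_range_m=4.0))  # [[0.75, 0.0], [0.0, 0.0]]
```

Using the nearest point per cell, rather than the average, is the conservative choice for collision avoidance: a thin pole should not be averaged away by the open space around it.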

Do you see parallels between health AI and self-driving?

At Cosign, we currently focus on automating documentation and information collection for clinics. Both areas involve building AI agents that can perceive and act within their environments, making decisions with imperfect information. This problem is particularly interesting to me because what we generate for physicians has far more degrees of freedom, and the underlying information is often more ambiguous and incomplete; in simpler terms, it’s harder to get right. The “perception” problem is also more active: if we are missing a piece of information, we can actively “call” the clinician or patient to retrieve it and complete the final task. These developments are very exciting, as the science was not there even a year ago.

What advantages do computers have over humans in handling tasks like documentation?

Computers excel at processing large amounts of information quickly and can maintain a more extensive long-term memory. This capability allows them to synthesize data from multiple sources effectively. However, the challenges in healthcare documentation are less about computational power and more about interpreting nuances in language and patient interactions, which are often subjective and vary widely between individuals. For instance, a computer might not capture tone or sarcasm, which are crucial in medical settings. Additionally, much goes “unsaid” in a patient encounter, from conversations held earlier or with other staff members to basic mutual understandings (such as "I am the doctor, you are the patient, and your wife is here to help with your medical decision-making"). AI in healthcare also deals with a form of occlusion: it rarely has the full picture, which makes perfect accuracy hard to achieve.

So, how are you approaching these challenges?

The key is integrating various multimodal data sources and understanding the context, which is still a significant hurdle. Current systems can transcribe speech but often miss the nuances that a human would catch. We are working towards systems that not only transcribe but also interpret the context and subtleties of human language by incorporating more robust models of probabilistic reasoning. This approach aims to improve the accuracy and relevance of the outputs, but it is always a balance between safety and usefulness. The safest thing to do would be to present the information exactly as it was collected, but that is overwhelming and not useful for clinicians. Processing the information will inevitably introduce some level of inconsistency, and we are always cognizant of this. Hallucinating key aspects of the history, diagnoses, or treatments is never acceptable.

How optimistic are you about the future of AI in healthcare?

I'm very optimistic. Advances in AI are rapidly changing what's possible, and we've already seen significant improvements that were unimaginable just a few years ago. As computational power continues to grow and we develop better models for understanding and processing human language and behavior, I believe AI will increasingly play a crucial role in enhancing healthcare efficiency and effectiveness. The potential to free up physicians' time and improve the quality of patient care is enormous. Beyond that, I see great potential in improving accessibility to care, moving beyond the necessity of visiting a human doctor for all medical needs. Imagine affordable care that’s always available and accessible from your phone.

How do you envision the future of AI in healthcare and the ultimate role for Cosign AI?

Physicians will always be limited by time when it comes to delivering optimal care for everyone. In an ideal world, spending more time with each patient would lead to better outcomes, but the reality of needing to see dozens of patients each day significantly limits this. AI and technology don't face time limitations, allowing them to handle repetitive or data-intensive tasks efficiently, which could otherwise burn out human healthcare professionals. Moreover, AI’s capabilities only improve over time.

At Cosign AI, we view the integration of AI in healthcare as augmenting rather than replacing human work—think of Tony Stark and his Iron Man suit. AI excels at processing and managing information, performing tasks like formatting notes and consolidating data, which are crucial but don't necessarily require a medical degree.

This allows physicians to dedicate more time to the aspects of their work that benefit most from human touch. In a sense, we're restoring healthcare to its founding principles—direct and meaningful human interaction. AI should be a tool that empowers physicians to return to the essence of medical care, unburdened by the administrative load that has crept into the profession over time. The future we envision at Cosign AI is one where technology serves as a powerful ally in the healthcare process, enhancing the efficiency and quality of care for everyone involved.